#Gemma 3 Released with #Multilingual & #Multimodal Capabilities

Blog: https://developers.googleblog.com/en/introducing-gemma3/

Video: https://youtu.be/UU13FN2Xpyw

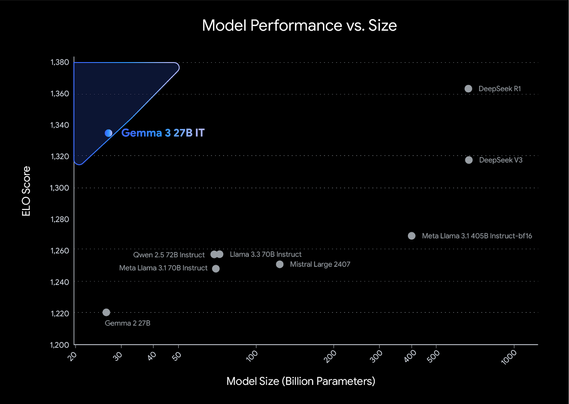

The 27B model outperforms larger competitors with an Elo score of 1338 while keeping a compact size. Gemma 3 is available in four sizes (1B, 4B, 12B, 27B), each in pre-trained and instruction-tuned versions. Its multimodal capabilities support vision-language tasks through a SigLIP encoder.
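For context on what a rating like 1338 means, the standard Elo expectation formula converts a rating gap into an expected head-to-head preference rate. The sketch below assumes that formula. LMArena reports its ratings on an Elo-style scale, but its exact fitting procedure may differ. The opponent rating is a hypothetical value chosen for illustration, not a published score.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected probability that A is preferred over B under the standard Elo model."""
    # A 400-point gap corresponds to 10:1 odds in favour of the higher-rated side.
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# 1338 is the reported score; 1238 is a hypothetical opponent 100 points lower.
p = elo_expected_score(1338, 1238)
print(f"{p:.3f}")
```

Under this formula, a 100-point lead translates to roughly a 64% expected preference rate. Equal ratings give exactly 50%.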

Supports 140+ languages with an improved multilingual tokenizer

Multimodal capabilities via an integrated SigLIP vision encoder for image and video input

Enhanced reasoning, math, and coding with RLHF, RLMF, and RLEF training

Expanded context window of 128k tokens for complex tasks

Achieves an LMArena score of 1338, making it the top compact open model

Available in 1B, 4B, 12B, and 27B parameter sizes

Compatible with the Gemma 2 dialog format for easy integration

Pre-trained on 2T to 14T tokens, depending on model size, using Google TPUs and JAX

Includes ShieldGemma 2, a specialized 4B image safety classifier
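Because Gemma 3 keeps the Gemma 2 dialog format, prompts built for Gemma 2 should carry over. The sketch below assumes the Gemma 2 turn markers `<start_of_turn>` and `<end_of_turn>` with the `user` and `model` roles. The helper name `format_gemma_prompt` is hypothetical. In practice, the tokenizer's own chat template is the safer choice, and it also handles the beginning-of-sequence token, which this sketch leaves out.

```python
def format_gemma_prompt(turns: list[tuple[str, str]]) -> str:
    """Render (role, text) turns in the Gemma dialog format and open a model turn.

    Assumes only the "user" and "model" roles; the BOS token is expected to be
    added separately (e.g. by the tokenizer).
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        # Each turn is wrapped in start/end markers and followed by a newline.
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    # Leave an open model turn so generation continues as the assistant.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


print(format_gemma_prompt([("user", "Summarize Gemma 3 in one line.")]))
```

Ending on an open `model` turn is what signals the model to respond. A multi-turn history is simply a longer list of alternating `user`/`model` pairs.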

Available today via AI Studio, Hugging Face, Ollama, Vertex AI, or your favorite open-source tools.